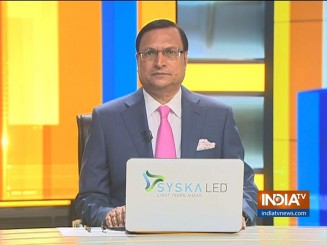

Prime Minister Narendra Modi on Friday expressed serious concern over the emerging threat from “deep fake” videos created through artificial intelligence-powered technology. He was addressing a Diwali Milan program with journalists in Delhi. Modi said, “A new crisis is emerging due to deep fakes produced through artificial intelligence. We have a big section of people who do not have the tools to carry out verification about their authenticity and ultimately people end up believing the videos to be genuine. This is going to become a big challenge.” Modi mentioned how he was targeted in a deep fake video that showed him doing the ‘garba’ dance at a Navratri festival. “They did it very well, but the fact is I have not played garba since ages. I used to play garba when I was a child, and I stopped playing after my school days. Because of this fake video made through artificial intelligence, my followers are forwarding this”, the Prime Minister said. Modi said he had raised the issue with stakeholders in the artificial intelligence industry. “I suggested to them that they should consider tagging AI-generated content which is vulnerable to misuse”, he said. Modi said that while new AI technology is making life easier, it can be dangerous too, as with the making of ‘deep fake’ videos. Such videos can spoil lives, damage the social fabric and create tension in society, and everyone needs to be on guard, he said. Modi said that with most Indians using cell phones frequently, any ‘deep fake’ video can be circulated through social media to millions of people within seconds and can cause great harm to society. He said the issue may be a minor one, but the danger is big. In a ‘deep fake’ video, a person’s face or other parts of the body can be superimposed on the face or body parts of another person and passed off as genuine. Katrina Kaif, Kajol, Rashmika Mandanna and several other celebrities have been targeted recently through ‘deep fake’ videos.
In the Madhya Pradesh assembly elections, fake videos were used by political parties, and both the Congress and the BJP lodged complaints with the Election Commission. On Friday, after voting was over in MP, chief minister Shivraj Singh Chouhan alleged that voters were misled through the circulation of fake videos. The BJP has lodged at least two dozen complaints with the EC, and most of them relate to fake videos. In one of the fake videos, Chouhan is shown telling ministers and bureaucrats that “people are angry..BJP can lose by big margins..go to every village and booth….go and manage”. The video was of the last cabinet meeting presided over by the CM, but the voice was not Chouhan’s. A voice nearly matching the CM’s had been superimposed on the video. Police had to ask social media platforms to remove this fake video. Even some popular TV show videos were morphed to mislead voters. In one ‘Kaun Banega Crorepati’ video clip, the entire content was changed by superimposing the voices of the host and the contestant. The purpose was to convey to viewers that Shivraj Singh Chouhan is a “ghoshna mukhyamantri” (literally, an “announcement chief minister”). Police have been unable to trace the origin of this video, but it was widely circulated by Congress supporters. Last month, Sony TV had to issue a clarification describing it as fabricated. It said, “We have been alerted to the circulation of an unauthorized video from our show ‘Kaun Banega Crorepati’. This video misleadingly overlays a fabricated voice-over of our host and presents distorted content….we are actively addressing this matter with the cyber crime cell. We strongly condemn such misinformation, urge our audience to be vigilant, and refrain from sharing unverified content.” Bollywood actor Kartik Aaryan was also targeted in a morphed video posted by a Congress supporter sporting a blue-tick mark on X. In the fake video, Aaryan was shown endorsing the Congress party in the MP elections. The original video featured Kartik in a promotional ad for Disney Hotstar.
This was morphed into a Congress election campaign ad. Kartik Aaryan had to clarify on Twitter, saying, “This is the REAL AD @DisneyPlusHS Rest all is Fake.” The morphed video featured the Congress ‘hand’ election symbol. The problem of fake and ‘deep fake’ videos has now become serious. Artificial intelligence tools have multiplied the risks. Even the popularity of a megastar like Amitabh Bachchan was misused through a fake video to defame chief minister Chouhan. The credibility of a big show like KBC was misused. This is a dangerous trend. By the time the complaints reached the Election Commission and an FIR was lodged, much damage had been done. These are only a few examples. Most of us get fake and morphed videos on our cell phones almost daily. Most people do not take them seriously. But those who do take these videos seriously have no tools to test their credibility. Personally, I get five to six videos daily from people asking whether they are fake or genuine. India TV has a team which verifies such videos, but the internet is an ocean with its net spread far and wide. It is next to impossible to verify all videos. Secondly, until two or three years ago, it took two or three days to make a fake video, because it required very high-definition footage. Now, software is available which can prepare a fake video in four to five hours. It has now become easier to superimpose a fake voice and match the lip sync. A fake video can damage a person’s image within a few hours. It can incite violence and tension in a community. We should understand the danger involved. There is one more disadvantage. If a leader is caught taking a bribe or violating laws in a genuine video, he or she can easily claim that the video is fake and challenge people to conduct a forensic test. It takes months for the forensic report to arrive. Even when the report comes, questions are raised. Therefore, one has to understand the risks involved.
Creating awareness among people with the help of cyber experts is essential. We should all be vigilant and refrain from forwarding doubtful videos without verifying them. As Prime Minister Modi said, marking such deep fake videos as ‘AI-powered content’ should be the first step. As information technology advances, we should become more alert. The responsibility of the media is greater. We should keep people informed. Instead of giving credibility to AI and ChatGPT, we should call out such videos as fake. It is our collective responsibility not to allow misuse of technology. We must ensure that the image of any person is not tarnished by the use of morphed or ‘deep fake’ videos. I would also like to caution viewers. I have received complaints that some people are selling medicines using my picture, some are promising employment in the media using my images, and somebody even sent fake phone messages in my name. My office has sent complaints to the police in all such matters. I ask all of you to remain alert and vigilant. Do not trust fake ads. Inform India TV, if required, whenever you see such fake ads. Verify all such videos and ads. Trust only those messages, posts and videos that are posted on my handle or on India TV’s official handle. They are verified and you can trust them.
